LLMs for our teams

Discover how we’re using self-hosted LLMs at Axioned to boost team productivity while ensuring security and privacy. Innovation meets responsibility with Open WebUI.

In late November 2022, the world witnessed a significant shift when ChatGPT was opened to the public. It marked one of the most transformative moments in recent technology—perhaps only rivaled by the advent of the internet and cloud computing. This development has the potential to shape the future in ways we’re just beginning to understand.

Over the past two years, we’ve seen a wide range of opinions about large language models (LLMs). Some claim, “This is the future,” while others argue, “It’s just regurgitating information,” and of course, “It can’t even count how many R’s are in ‘strawberry.’” It’s true: LLMs do some things remarkably well and others not so well, but they always appear confident either way.

Personally, I view these tools as learning companions—sometimes acting like a junior researcher, a generalist, or an assistant. Yes, they can be naive or overeager, but they work fast. You can delegate a wide range of tasks to them, and much like working with human colleagues, your effectiveness depends on how you manage and guide them.

At Axioned, we’ve been exploring how to best leverage LLMs in our teams. Over the past year, we conducted several surveys, both structured and informal, to gauge their impact and potential. A common concern we encountered—either implicitly or explicitly—was around security and privacy. This is particularly critical in the technology services space, where sensitive data is a daily concern. In response, we published Generative AI Guidelines in our company handbook to encourage responsible use while addressing these concerns.

However, we felt there was more to be done. When building solutions for clients, we always prioritize security and privacy, leveraging best-in-class tools. So, we needed a way for our teams to experiment safely with LLMs in a controlled environment—without compromising our standards.

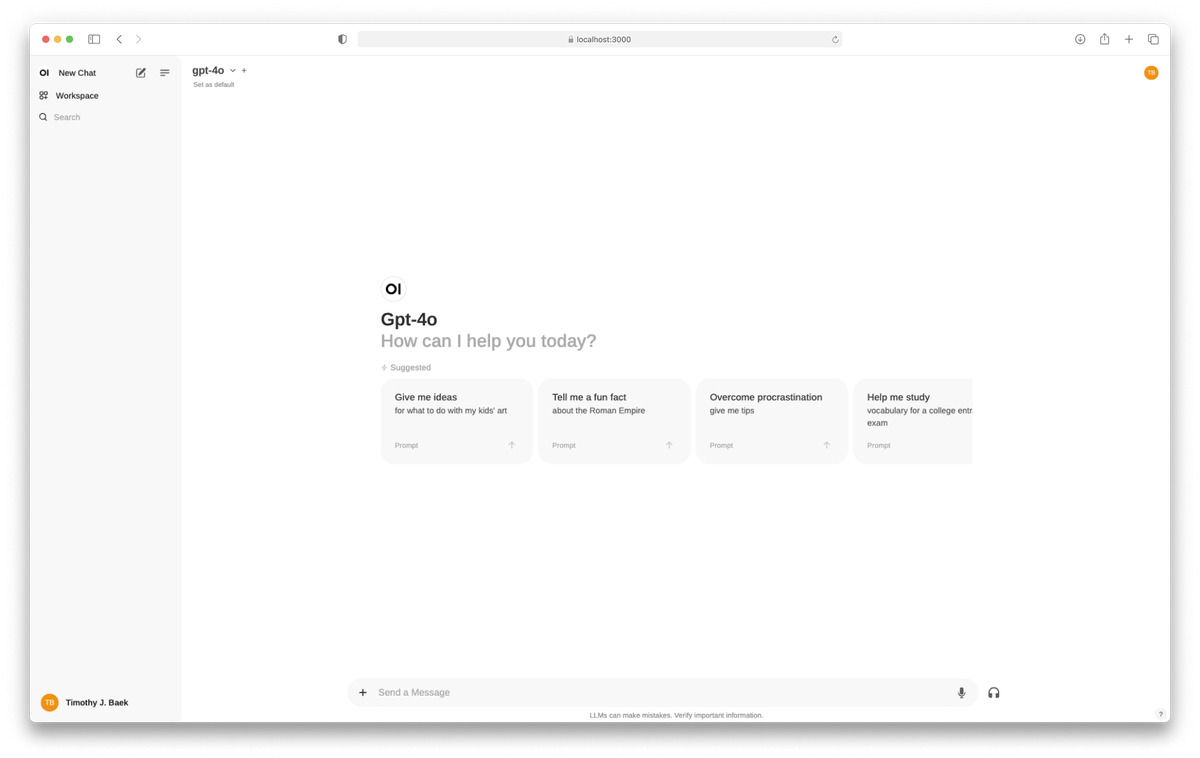

That’s where Open WebUI comes in. This platform lets us self-host a private LLM environment, giving our teams a safe space to interact with these models. Data sent through OpenAI’s APIs is not used to train their models (thanks to enterprise-level privacy protections), and we self-host other models ourselves through Ollama. Together, these let us provide access to the latest models, centralized prompts, and more. It’s the perfect balance between innovation and security.

It allowed us to adopt these technologies and open them up to our teams more quickly and much more cost-efficiently (compared to ChatGPT for teams), especially in an environment where there is so much innovation and we don’t want to be locked into one model, platform, or vendor.
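To make the "not locked into one vendor" point concrete, here is a minimal Python sketch of what talking to two backends through one function can look like. It is an illustration only, not how Open WebUI is implemented and not our actual setup. The function name `build_chat_request` and the model names are hypothetical, and the URLs assume Ollama's default local port and OpenAI's public chat completions endpoint. The function only builds the HTTP request; it does not send it.

```python
import json
from urllib.request import Request

# Assumed endpoints: Ollama's default local address and OpenAI's public API.
OLLAMA_URL = "http://localhost:11434/api/chat"
OPENAI_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(backend, model, prompt, api_key=None):
    """Build (but do not send) a chat request for the chosen backend.

    Hypothetical helper for illustration; both backends accept a list of
    role/content messages, so only the URL, headers, and a few payload
    fields differ.
    """
    messages = [{"role": "user", "content": prompt}]
    headers = {"Content-Type": "application/json"}

    if backend == "ollama":
        url = OLLAMA_URL
        # "stream": False asks Ollama for a single JSON response.
        payload = {"model": model, "messages": messages, "stream": False}
    elif backend == "openai":
        if not api_key:
            raise ValueError("the OpenAI backend needs an API key")
        url = OPENAI_URL
        payload = {"model": model, "messages": messages}
        headers["Authorization"] = f"Bearer {api_key}"
    else:
        raise ValueError(f"unknown backend: {backend}")

    body = json.dumps(payload).encode("utf-8")
    return Request(url, data=body, headers=headers, method="POST")


# Same call shape, different backend; the model names are placeholders.
local_req = build_chat_request("ollama", "llama3", "Summarize this ticket.")
hosted_req = build_chat_request(
    "openai", "gpt-4o-mini", "Summarize this ticket.", api_key="sk-placeholder"
)
```

Switching providers here is a one-argument change, which is the flexibility we were after. A real deployment would also need to send the request, handle errors, and keep API keys out of source code.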

Since adopting Open WebUI, we’ve seen increased usage and confidence in working with LLMs in-house. Providing this safe, private environment has empowered our teams to explore and experiment freely, without the limitations or concerns that typically accompany third-party platforms. It’s not just about removing usage caps; it’s about fostering a culture of responsible innovation.

At Axioned, we believe that LLMs are not replacing anyone—they’re enhancing what we can achieve together. And by putting the right safeguards in place, we’re ensuring that our teams can use these tools to their fullest potential, unlocking new possibilities for us and for our clients.