How We're Using AI at Axioned (And How You Can Too!)

At Axioned, we treat AI like a teammate—collaborating with it to boost productivity across design, dev, QA, and PM. Using a 5-step framework, we give context, iterate, and refine—safely and smartly. Want to work with an AI-enabled team? Let’s talk.

Most people prompt AI like they’re searching for something on Google: short commands, no context, and the hope that it will find exactly what they’re looking for in “one shot”.

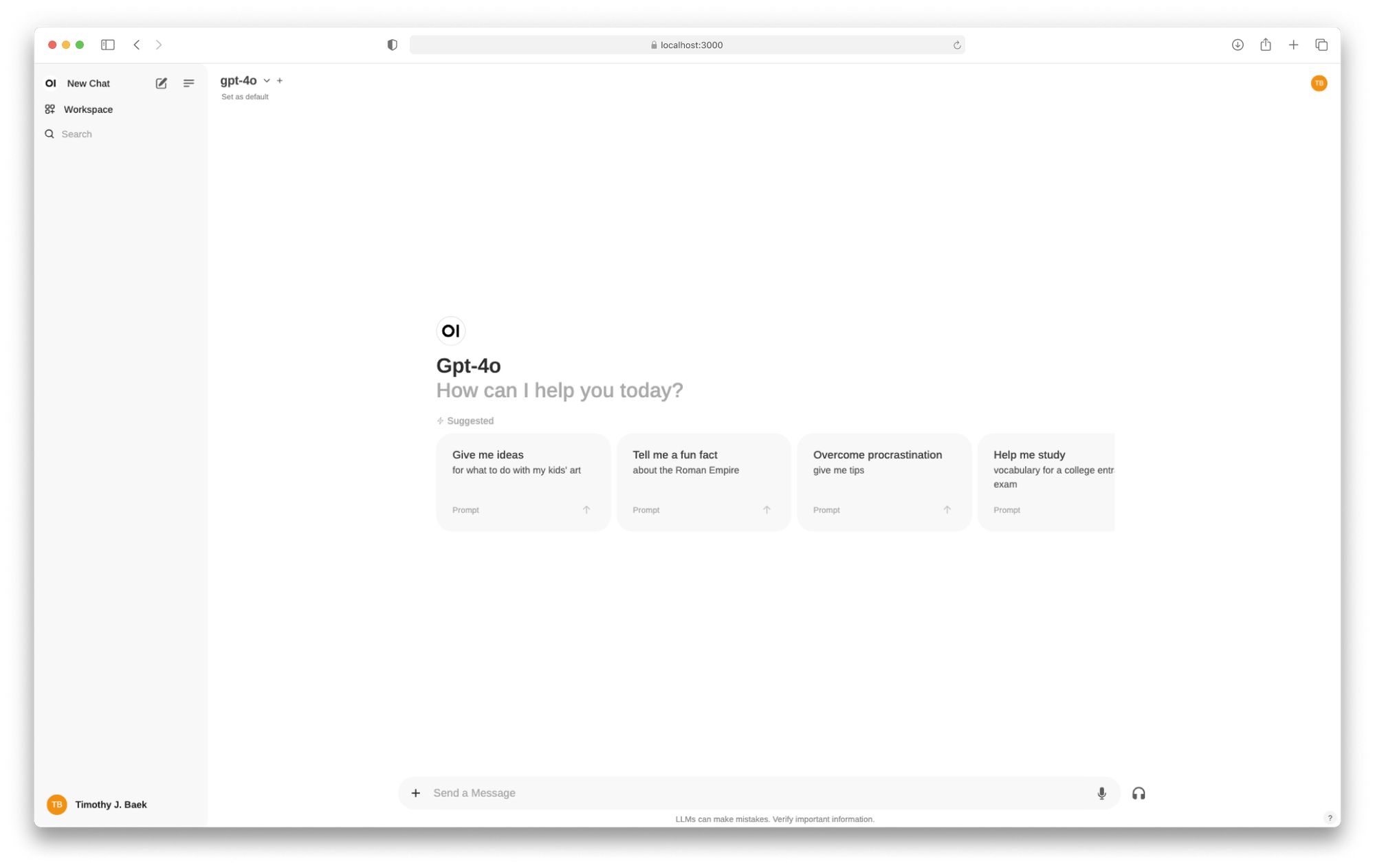

In many cases, your AI tool of choice (frequently ChatGPT, Claude, etc.) responds with an answer that sounds close to what you were looking for. However, this approach is a recipe for hallucinations and inaccuracies, the kind that people complain about when using AI.

The biggest leaps in productivity come when we stop treating AI like a tool and start treating it like a teammate.

"Teammate, not technology, should be a mantra amongst any team or organization that wants to outperform with AI." ~Jeremy Utley, adjunct professor at Stanford University, and co-author of Ideaflow: The Only Business Metric That Matters.

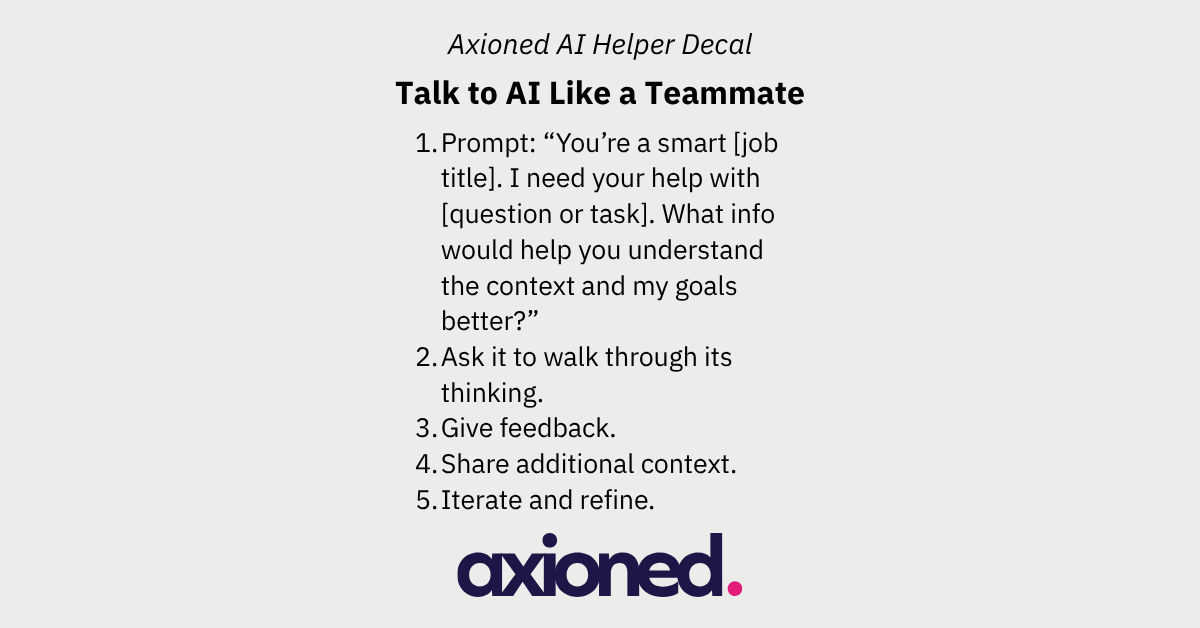

Talk to AI Like a Teammate - A 5-Step Framework

Originally shared by Jeremy Utley, this model is reframing how we interact with AI in our day-to-day activities at Axioned:

1. Prompt: “You’re a smart [job title]. I need your help with [question or task]. What info would help you understand the context and my goals better?”

Here’s an example that Jeremy shared:

"You’re a gifted document analyst. I need your help summarizing this document. Before you begin, please let me know what additional information would help you understand the context and my goals better."

Just like your teammate, AI needs to understand the context you’re dealing with and your end goal.

And by asking the AI to tell you what else it needs - in terms of context and goal information - you are positioning it to become a true collaborator.

2. Ask it to walk through its thinking

When you ask AI to walk through its reasoning with you, you get better results - just like you would with a teammate. As you read its answers, you can make corrections and/or redirect - again, like you would with a teammate.

3. Give feedback

Whether or not the response was what you were looking for, give honest feedback - again, like a fellow teammate would want from you.

4. Share additional context

Just as you shared context at the beginning, keep providing additional context as the conversation progresses.

5. Iterate and refine

Work with your “AI teammate” to iterate and refine your “solution” until you’ve gotten something you feel 101% comfortable with.

If you’re not sure of how well YOU can judge your AI teammate’s “solution”, then perhaps add the prompt:

"Would [job title of the person who will judge your work] find this an acceptable solution?"

OR

"Would [job title of someone whose opinion you’d value] find this an acceptable solution?"

Then repeat from step 2 onwards.
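
If you find yourself retyping these prompts, the templates from step 1 and step 5 can be captured in a couple of small helper functions. What follows is only a minimal sketch in Python: the function names `opening_prompt` and `judge_prompt` are our own invention for illustration, not part of Jeremy's framework or any AI tool's API, and the output is plain text you would paste or send into whichever tool you use.

```python
def opening_prompt(job_title: str, task: str) -> str:
    """Step 1: assign a role, state the task, and ask what context is missing.

    Hypothetical helper; the wording follows the step 1 template above.
    """
    return (
        f"You're a smart {job_title}. I need your help with {task}. "
        "What info would help you understand the context and my goals better?"
    )


def judge_prompt(reviewer: str) -> str:
    """Step 5: ask whether a specific reviewer would accept the solution.

    Hypothetical helper; pass the reviewer with its article,
    e.g. "a senior QA engineer".
    """
    return f"Would {reviewer} find this an acceptable solution?"


if __name__ == "__main__":
    # Example values are placeholders; swap in your own role and task.
    print(opening_prompt("document analyst", "summarizing this document"))
    print(judge_prompt("a senior QA engineer"))
```

The helpers only cover the fixed wording. Steps 2 to 4 (reasoning, feedback, extra context) depend on the conversation, so they are best written by hand each time.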

Axioned Use Cases

Some recent quotes gathered from our team of developers, testers, UX/UI designers and project managers:

"Tools like Stitch with Google help me rapidly generate wireframes and brainstorm early ideas. I also use Whimsical AI to convert user journey text into flow diagrams. Some of the AI image generators are also great for visual storytelling. Figma’s AI helps with contextual design assistance, and Attention Insight can analyze user engagement and optimize my designs." ~Axioned Designer

"ChatGPT and Cursor are my go-to problem-solving buddies. Whether I’m debugging tricky issues or exploring unfamiliar territory, AI gives me a faster and more creative way to approach problems in my day-to-day life as a developer and engineer." ~Axioned Developer

"I use it to better understand client requests so I can guide my team effectively. I want to make sure I ask the right questions and key implementation details aren’t missed. Beyond that, AI tools have become my go-to support for refining drafts and structuring key documents like project briefs, Statements of Work (SOWs), and Standard Operating Procedures (SOPs). They’re also great for summarizing long meetings into clear, concise notes, and highlighting key discussion points and action items." ~Axioned Project Manager

"I use AI like a second brain. Even with my years of QA experience it still helps me to structure complex test scenarios or validate my approach. For example, when testing a user registration flow or similar features, I ask AI to list possible edge cases to ensure I don’t miss anything important." ~Axioned QA/Tester

To read more about Axioned's experiments with AI tech, see here:

Axioned’s AI Safety Tips

Axioned supports responsible experimentation with generative AI tools, but it’s essential to consider information security, data privacy, and compliance when using them. We’ve written about this and published “starter” guidelines in our public-facing handbook, here: Axioned's Handbook

Some key practices to keep in mind:

1 - Anonymize or remove sensitive information. If unsure, pause and ask for permission first.

2 - Actively opt out of the setting that "improves the model for everyone". Turn that sh!t off!

In GitHub, go to your Settings → Code, planning, and automation → Copilot and deselect: “Allow GitHub to use my code snippets from the code editor for product improvements.” This prevents your code from being used to further train GitHub Copilot.

Check similar settings in any AI tool you use. Most generative AI tools include an option to opt out of sharing your inputs/data to improve their models. These settings are often hidden under:

- Privacy, Data Sharing, or Settings → Labs/Beta/Experiments

- Labels like: “Help improve [Tool Name]”, “Share usage data”, or “Allow training on conversations”

When in doubt, search for “improve” or “training” in the settings, and opt out. If you’re unsure whether a tool is logging or sharing data, play it safe and assume it might be, until you confirm otherwise.
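
For tip 1 (anonymize or remove sensitive information), a quick first pass can be scripted before you paste text into an AI tool. The snippet below is a rough sketch in Python, not an Axioned tool: the `redact` function and both regular expressions are illustrative assumptions, they only catch email addresses and phone-like number sequences, and they will miss names, addresses, API keys, and plenty more. Treat it as a helper for a human review, never a replacement for one.

```python
import re

# Illustrative patterns only (assumptions, not a complete PII detector).
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
# Crude: an optional "+", then digits mixed with spaces, dots, dashes, or parentheses.
# It can also match other long digit sequences such as IDs.
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text: str) -> str:
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    text = PHONE.sub("[PHONE]", text)
    return text


if __name__ == "__main__":
    sample = "Contact jane.doe@example.com or +1 (555) 123-4567 today."
    print(redact(sample))
```

Still read the result yourself before sharing it. If anything client-specific remains, remove it by hand or ask for permission first.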

Excited to work with a team that’s actively embracing AI across everything we do? That’s us - Axioned.

Let’s chat! Book a call with Libby or Tim to explore how Axioned can help.